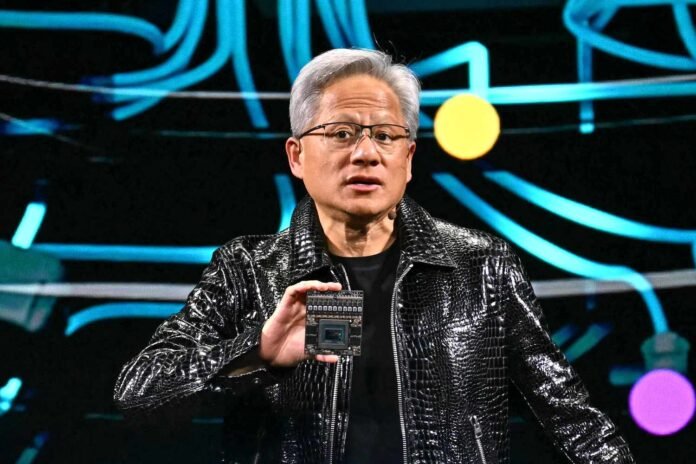

At Nvidia’s annual GTC conference, CEO Jensen Huang delivered a clear message to the tech industry: faster chips are the most effective way to reduce the costs of artificial intelligence (AI) infrastructure. During his two-hour keynote on Tuesday, Huang emphasized that the rapid advancement of Nvidia’s graphics processing units (GPUs) will address concerns about the high costs and return on investment (ROI) associated with AI development.

“Over the next 10 years, because we could see improving performance so dramatically, speed is the best cost-reduction system,” Huang told journalists after his keynote.

Nvidia, a leader in AI hardware, showcased its latest innovations, including the Blackwell Ultra systems, set to launch later this year. According to Huang, these systems could generate 50 times more revenue for data centers than the preceding Hopper systems, because they can serve AI applications to millions of users simultaneously.

Huang highlighted the economics of faster chips, explaining how they lower the cost per token, a measure of what it costs to produce one unit of AI output. He noted that hyperscale cloud providers and AI companies are particularly focused on this metric as they plan their infrastructure investments.
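The arithmetic behind cost per token can be sketched in a few lines of Python. This is a minimal illustration, not Nvidia's own model: the function name `cost_per_token` and every dollar and throughput figure below are hypothetical assumptions chosen only to show how a faster system can come out cheaper per token even when it costs more to run.

```python
def cost_per_token(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Dollars spent to generate one token at a steady throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour


# Hypothetical figures for illustration only; none come from Nvidia or the article.
slow = cost_per_token(hourly_cost_usd=100.0, tokens_per_second=10_000)
fast = cost_per_token(hourly_cost_usd=150.0, tokens_per_second=40_000)

print(f"slower system: ${slow * 1e6:.2f} per million tokens")
print(f"faster system: ${fast * 1e6:.2f} per million tokens")
```

Under these assumed numbers, the faster system costs 50% more per hour but produces four times as many tokens, so its cost per token is lower. That is the shape of the argument Huang makes, whatever the real figures turn out to be.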

“The question is, what do you want for several hundred billion dollars?” Huang said, referring to the massive budgets cloud providers are allocating for AI infrastructure.

Nvidia’s Blackwell GPUs, which analysts estimate cost around $40,000 per unit, have already seen strong demand. The company revealed that the four largest cloud providers (Microsoft, Google, Amazon, and Oracle) have collectively purchased 3.6 million Blackwell GPUs. That is nearly three times the 1.3 million Hopper GPUs sold previously.

To address long-term planning needs, Nvidia also unveiled its roadmap for future AI chips, including the Rubin Next system slated for 2027 and the Feynman system for 2028. Huang explained that cloud providers are already designing data centers years in advance and need clarity on Nvidia’s future offerings.

“We know right now, as we speak, in a couple of years, several hundred billion dollars of AI infrastructure will be built,” Huang said. “You’ve got the budget approved. You got the power approved. You got the land.”

Huang also dismissed concerns about competition from custom AI chips developed by cloud providers, known as application-specific integrated circuits (ASICs). He argued that these chips lack the flexibility required to keep pace with rapidly evolving AI algorithms and expressed skepticism about their viability.

“A lot of ASICs get canceled,” Huang said. “The ASIC still has to be better than the best.”

Nvidia’s focus remains on delivering cutting-edge GPUs that can power the next generation of AI applications. With the Blackwell systems and future innovations, Huang is confident that Nvidia will continue to dominate the AI hardware market, enabling faster, more cost-effective AI solutions for businesses worldwide.
